Project

Gestural Semantics

Exploring how physical form can communicate gestural interaction patterns

Overview

As gestural interfaces began to show potential for mainstream consumer products, this research project explored a fundamental question: how can physical form communicate the gestures needed for interaction?

Using a radio as the platform—an object whose physicality is primarily about interaction—I developed a methodology to understand how form language can guide users toward correct gestural inputs without training or instruction.

Research Methodology

The research began with understanding gestural memory and affordances through a multi-phase approach:

Phase 1: Gestural Memory Study

I conducted interviews with colleagues, asking them to perform common kitchen activities, such as making tea or fetching items from the refrigerator, without any objects present. The goal was to understand whether people needed physical objects as visual cues to recall gestures, or whether they could perform actions from memory alone.

This revealed that many gestures are deeply embodied and can be performed without environmental prompts, suggesting that form could tap into existing gestural vocabularies.

03 Interview participants performing kitchen activities without objects present

Phase 2: Room-Scale Installation

I created a room-scale installation with various abstract objects at different scales. Participants moved through the space interacting with these forms without knowing their intended function. This phase revealed how physical properties—scale, orientation, surface qualities—prompted specific gestural responses.

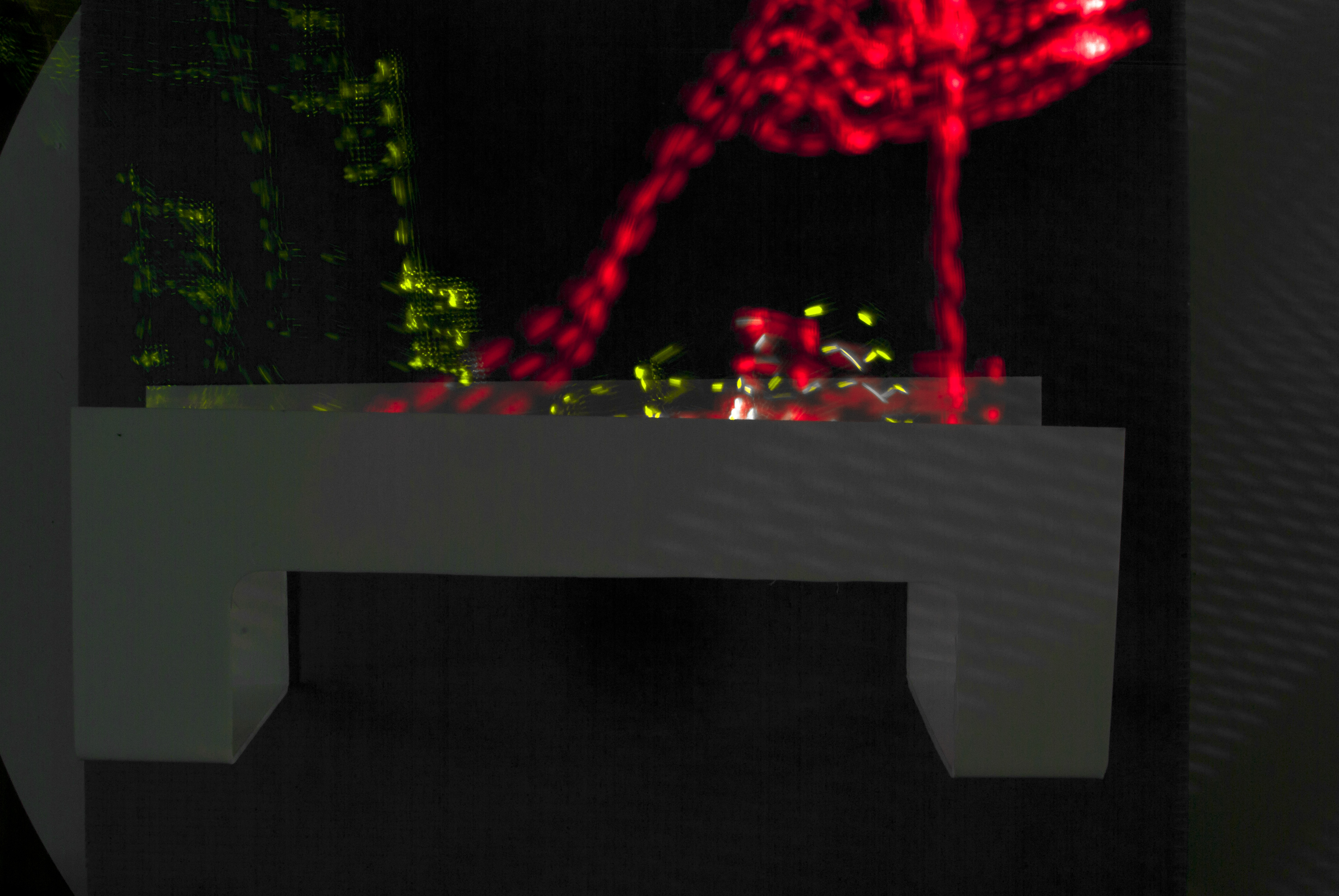

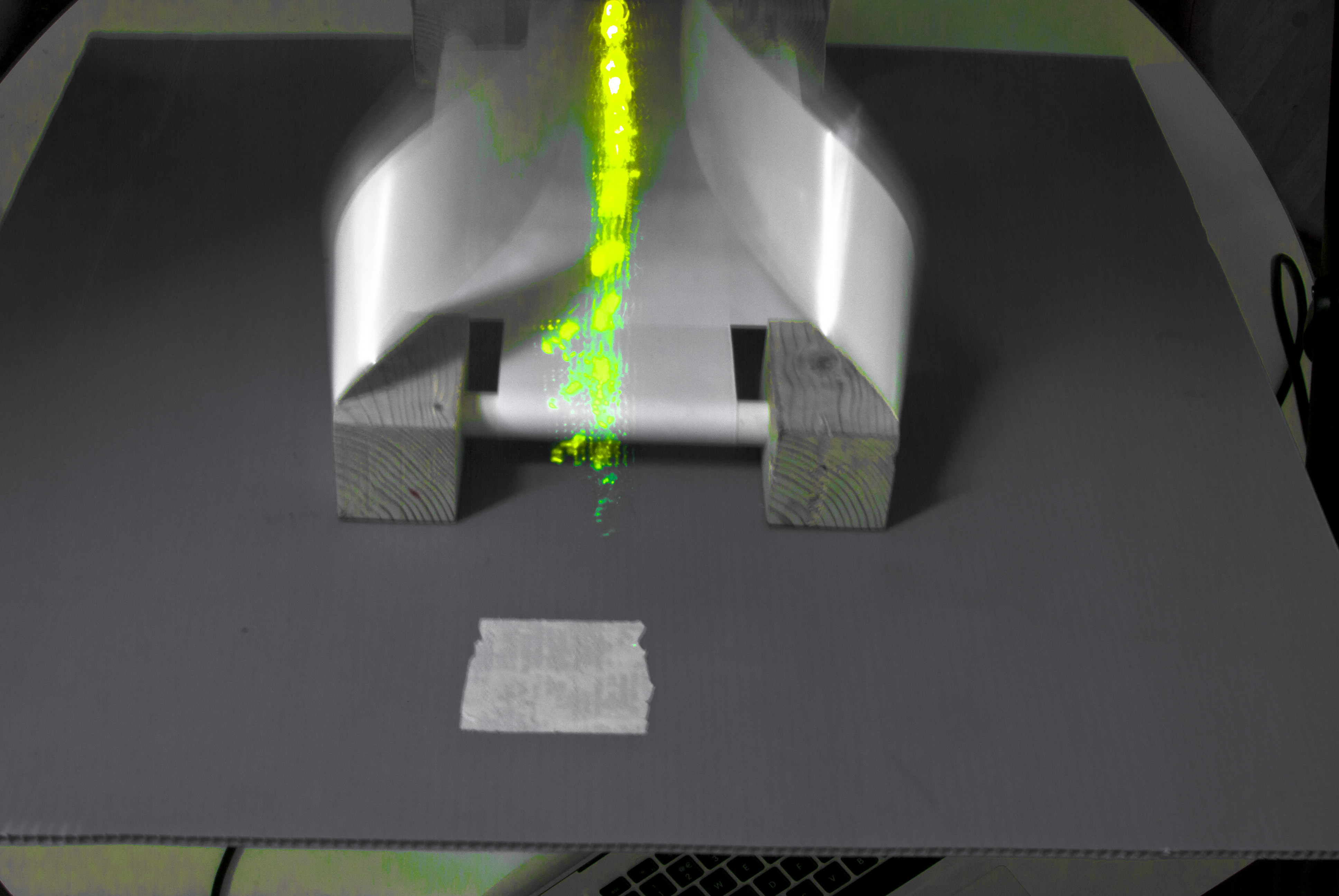

Phase 3: LED Motion Tracking

The final research phase used long-exposure photography, with red and green LEDs attached to participants' hands, to capture the first 20 seconds of interaction with test objects. This technique made gestural patterns visible and measurable.

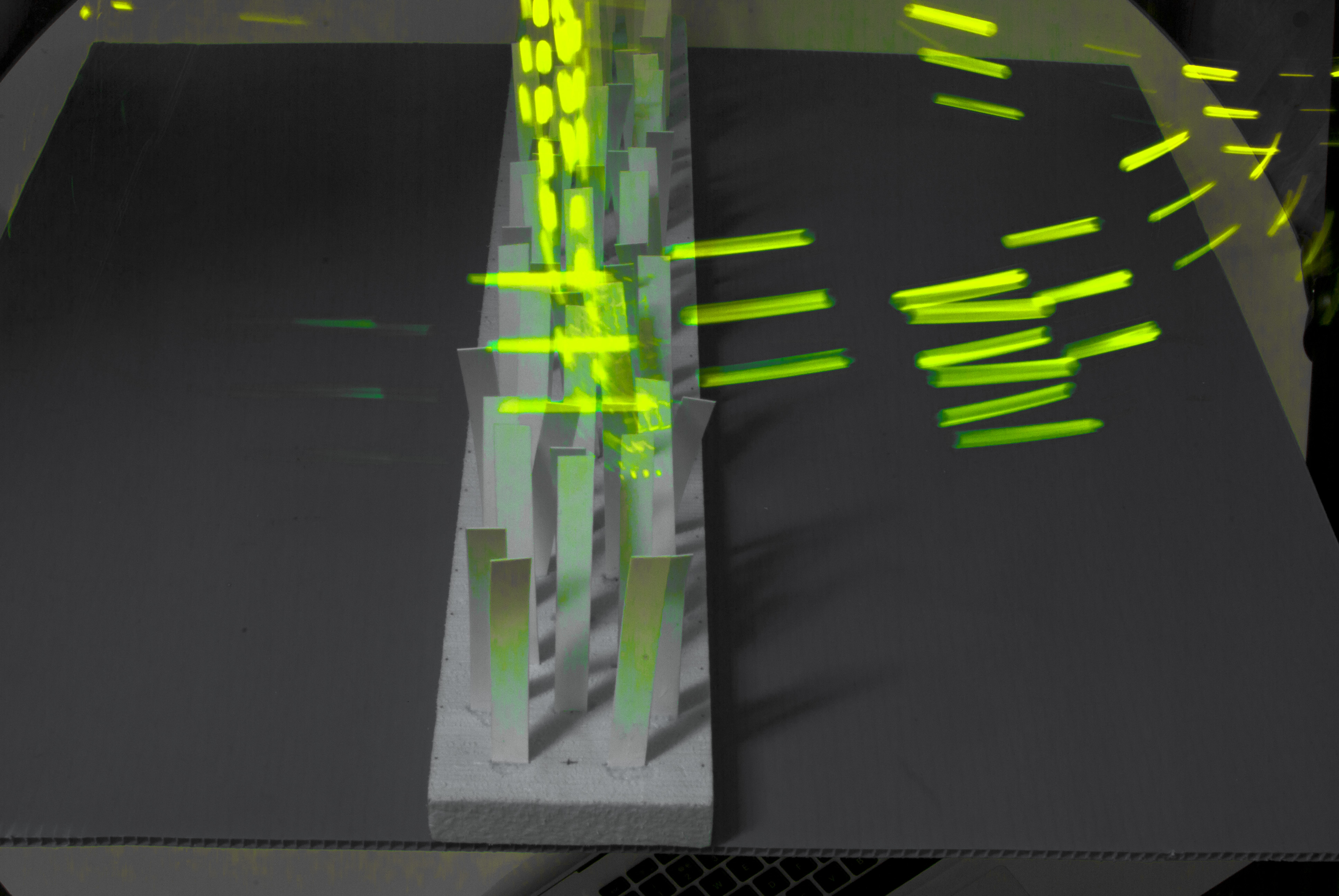

Iterative Form Development

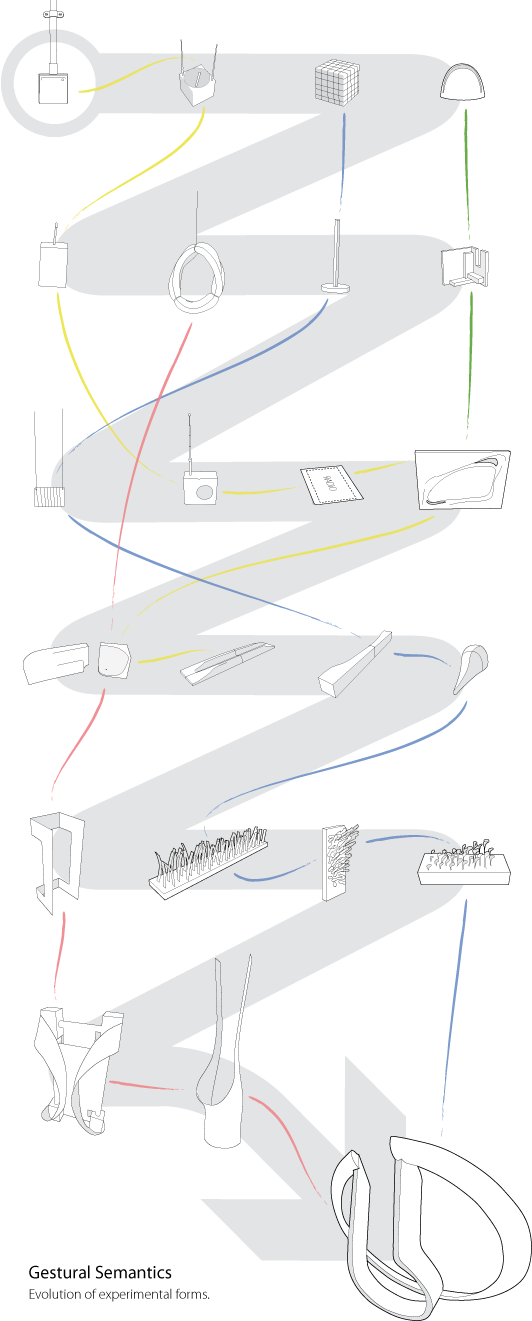

Forms that provoked consistent reactions across users were developed further, leading to an evolution of experimental prototypes. Each iteration refined the relationship between physical form and gestural affordance.

The diagram shows the progression from initial explorations through multiple test forms, each refining the relationship between physical properties and gestural response until arriving at the final radio design.

08 Evolution of experimental forms through iterative user testing

Sensor Technology Development

Translating the gestural insights into a functional prototype required developing custom sensing technology. This became a significant technical challenge, requiring multiple iterations to achieve reliable gesture detection.

First Approach: Capacitive Sensing

I initially developed capacitive sensors using aluminum foil pads connected to an Arduino Micro. These sensors were remarkably sensitive, detecting hand movements at distances of up to 20 cm. However, they proved unreliable in practice: environmental factors and interference made consistent operation difficult.

09 Early capacitive sensing prototype showing effective but ultimately unreliable detection

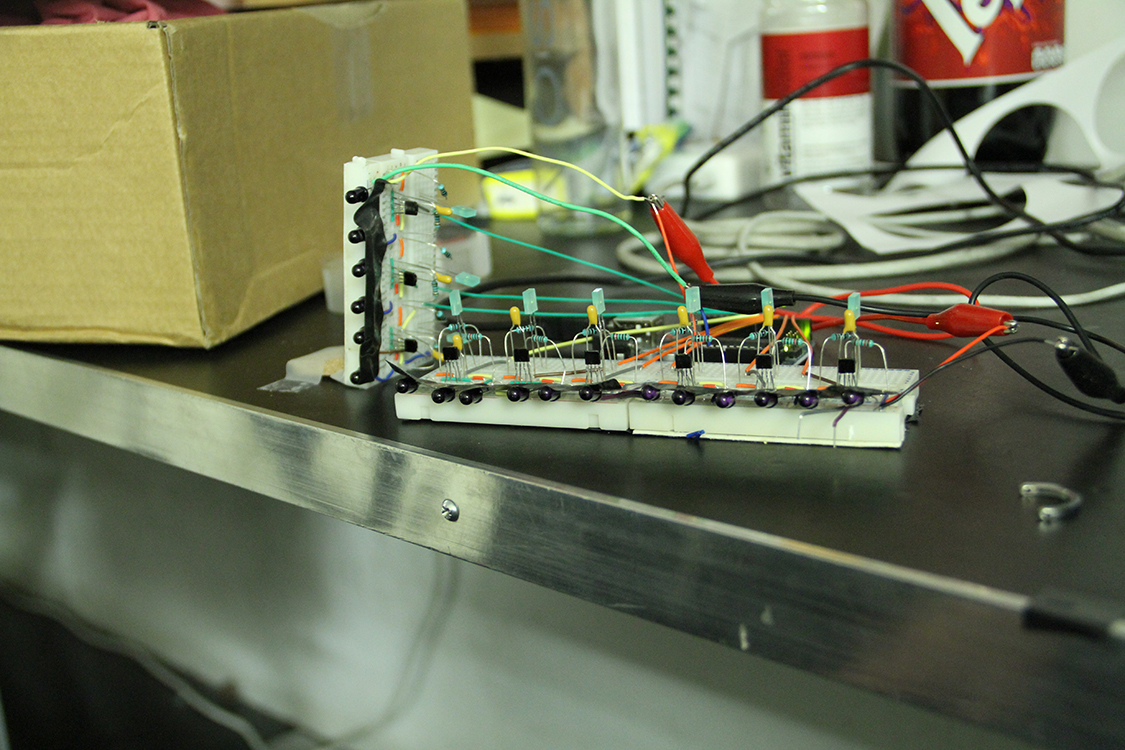

Second Approach: Infrared Frequency Modulation

The solution came from switching to infrared LED sensors modulated at specific frequencies. By driving each sensor's emitter at a unique frequency, multiple sensors could operate side by side, each recognizing only its own modulated light when it reflected back from the user's hands.

This approach eliminated interference between sensors while maintaining detection reliability. I designed custom circuit boards and manufactured them to integrate this technology into the final radio form.
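The frequency-separation idea can be illustrated with a small simulation. This is a minimal sketch in Python rather than the radio's actual firmware, and it rests on assumptions not stated in the project: the blink frequencies (1000 Hz and 1250 Hz), the sample rate, the signal amplitudes, the detection threshold, and the use of the Goertzel algorithm as the frequency detector are all illustrative choices. It shows how a receiver might ignore ambient light and a neighbouring emitter while still picking out its own reflected signal.

```python
import math


def led_drive(freq_hz, sample_rate, n):
    """On/off drive signal for an LED blinking at freq_hz (hypothetical helper)."""
    period = sample_rate // freq_hz  # samples per blink cycle
    return [1.0 if (i % period) < period // 2 else 0.0 for i in range(n)]


def goertzel_power(samples, sample_rate, target_hz):
    """Signal power at target_hz, computed with the Goertzel algorithm."""
    n = len(samples)
    k = round(n * target_hz / sample_rate)  # nearest DFT bin
    coeff = 2.0 * math.cos(2.0 * math.pi * k / n)
    s1 = s2 = 0.0
    for x in samples:
        # Second-order resonator tuned to the target bin.
        s1, s2 = x + coeff * s1 - s2, s1
    return s1 * s1 + s2 * s2 - coeff * s1 * s2


def sees_own_reflection(samples, sample_rate, own_hz, threshold=100.0):
    """True if enough energy at own_hz is present (threshold is an assumption)."""
    return goertzel_power(samples, sample_rate, own_hz) > threshold


# Assumed values for illustration only.
SAMPLE_RATE = 20_000
N = 400
own = led_drive(1000, SAMPLE_RATE, N)
neighbour = led_drive(1250, SAMPLE_RATE, N)
ambient = 0.3  # constant background light

# Receiver input without a hand: ambient light plus stray neighbour light.
no_hand = [ambient + 0.5 * b for b in neighbour]
# Receiver input with a hand reflecting the sensor's own emitter.
hand = [ambient + 0.4 * a + 0.5 * b for a, b in zip(own, neighbour)]

print(sees_own_reflection(no_hand, SAMPLE_RATE, 1000))  # False
print(sees_own_reflection(hand, SAMPLE_RATE, 1000))     # True
```

In this sketch the two blink frequencies are chosen so that a whole number of cycles of each fits in the sample window, which keeps the neighbour's signal from leaking into the detector's frequency bin.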

Design Outcome

The final radio operates through two intuitive gestures:

- Volume control: Up and down hand movement between the vertical elements

- Tuning: Side to side hand movement over the outer ring
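One way a row of reflection sensors could be turned into a movement direction is sketched below. This is a hypothetical illustration, not the radio's documented logic: the sensor layout (a row indexed from 0), the normalized reflection strengths, and the `min_total` and `min_travel` thresholds are all assumptions. The same routine could serve either gesture, with the row running vertically for volume or around the ring for tuning.

```python
def hand_position(strengths, min_total=0.2):
    """Weighted centroid of sensor indices, or None if no hand is detected."""
    total = sum(strengths)
    if total < min_total:
        return None
    return sum(i * s for i, s in enumerate(strengths)) / total


def movement_direction(frames, min_travel=0.5):
    """Return +1, -1, or 0 for movement along the sensor row.

    frames is a time-ordered list of per-sensor reflection strengths.
    """
    positions = [p for p in (hand_position(f) for f in frames) if p is not None]
    if len(positions) < 2:
        return 0
    travel = positions[-1] - positions[0]
    if abs(travel) < min_travel:
        return 0  # too small to count as a deliberate gesture
    return 1 if travel > 0 else -1


# Example: the strongest reflection moves from sensor 0 towards sensor 2.
rising = [[0.9, 0.1, 0.0], [0.2, 0.8, 0.1], [0.0, 0.2, 0.9]]
print(movement_direction(rising))
print(movement_direction(list(reversed(rising))))
```

A centroid is used rather than simply taking the strongest sensor so that a hand positioned between two sensors still produces a smooth position estimate.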

The form itself teaches the interaction—no buttons, no labels, no instructions needed. The physical language of the object communicates its operation.

Impact

This project established a methodology for designing gestural interfaces that remains relevant as touchless interaction continues to evolve. The core principle, that form can encode interaction semantics, applies across gestural systems from smartphones to spatial computing.

What I Learned:

The most valuable lesson was trusting user behavior over designer intuition. By observing how people naturally interacted with forms, the design emerged from human patterns rather than imposed assumptions. This research-driven approach to interaction design showed me that the best interfaces don't teach users—they respond to innate human tendencies.

The LED motion tracking technique proved that systematic observation can reveal interaction patterns invisible to casual testing. Good interaction design requires understanding not just what users do, but why they do it.

Get in touch for more information or to book a consultation.

